What You’ll Find This Week

HELLO {{ FNAME | INNOVATOR }}!

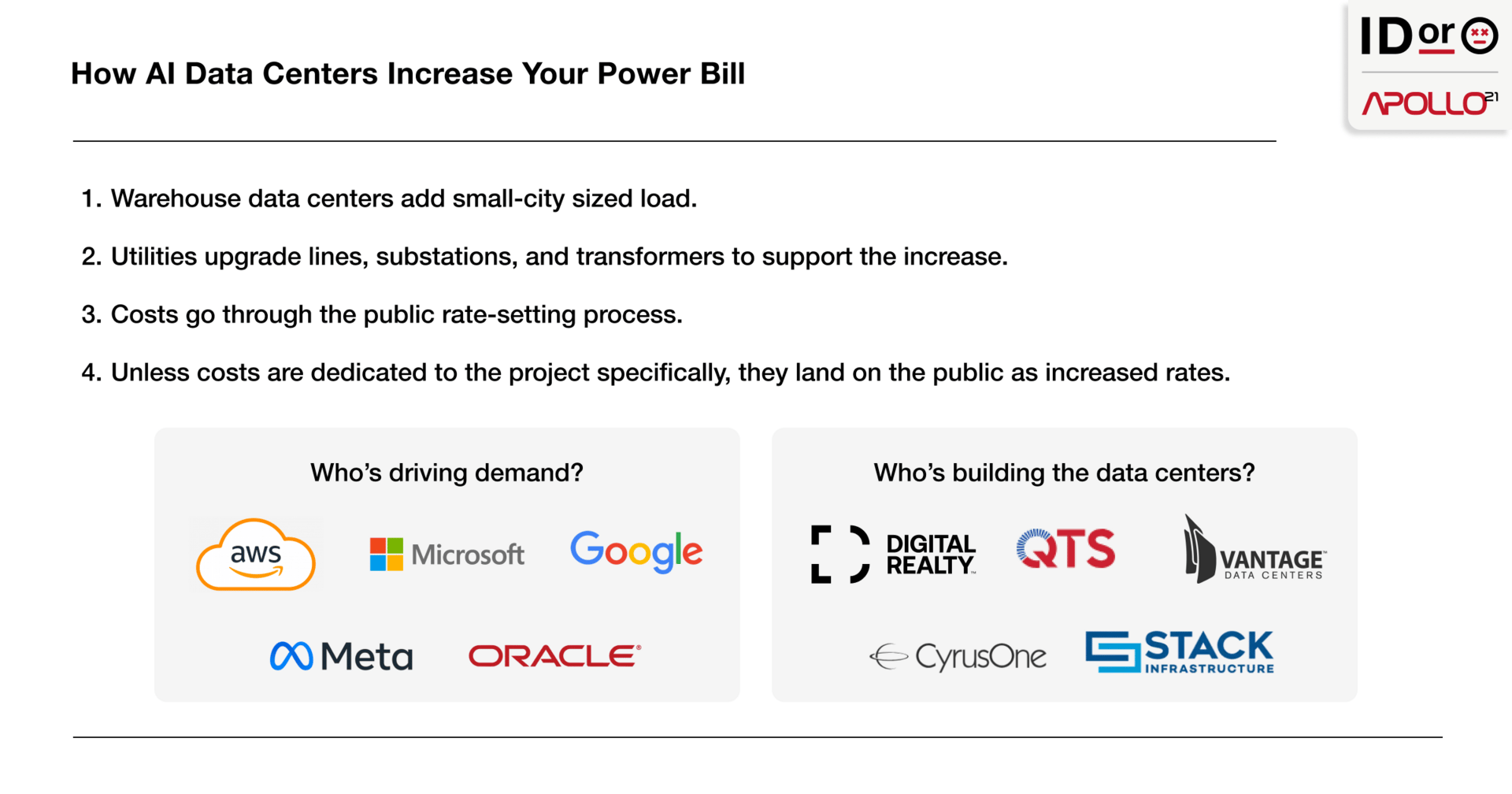

Conversations about AI are everywhere today, from the board meetings of F500s to the table next to you at Starbucks. Most of them focus on simple use cases or, for the nerdier tech crowd, on which frontier model is your favorite. But a deeper, more widely impactful conversation is beginning to surface: who’s footing the bill for rising compute costs? Specifically, the energy bill.

This week, I’m taking a look at how the biggest innovation since the internet is impacting folks who have never even used Claude or ChatGPT. If you’re worried about how AI is going to increase your monthly energy bill, this week’s article breaks down how a simple prompt drives up the cost of electricity for all of us.

Here’s what you’ll find:

This Week’s Article: AI Isn't a Model Race. It's a Power Grab.

Share This: How AI Data Centers Increase Your Power Bill

Don’t Miss Our Latest Podcast

This Week’s Article

AI Isn't a Model Race. It's a Power Grab.

Most people think of AI progress in terms of model quality, prompts, or GPUs. That’s where most of the conversation is centered.

The real bottleneck, however, is power you can actually secure. Power with a delivery date, an interconnection path, and a permitting plan that survives contact with the public.

We call this power entitlement.

In real estate, “entitlements” are the approvals that make a site buildable.

In AI infrastructure, power entitlement is the right to pull massive electricity from a specific grid, in a specific place, on a specific timeline, without getting killed by interconnection delays, permitting fights, or equipment lead times.

Guy Massey captured the vibe with “Aspirations. Expectations. Limitations.”

Every CFO said the same thing last quarter:

"We're behind. Demand is increasing."

They expect grids to expand.

They expect permits to clear.

They expect supply chains to deliver.

They expect the world to keep pace. It won't.

The model debate, in spite of all its noise, is just a sideshow. The real battle is for the megawatts that power the massive compute necessary to summarize countless emails.

Whoever locks power entitlement first sets the pace. Everyone else rents capacity and hopes for the best.

AI is turning power access into strategy. Strategy is turning into a land rush.

Why This Is a Land Rush, Not a Model Race

Model advantages get competed away. Entitlements don’t.

You can rent intelligence. You can’t rent megawatts that aren’t there.

So the scarce “land” in AI isn’t dirt. It’s power entitlement. Interconnection position. Substation headroom. Transformer slots. Permits. Water. Local permission.

How the Land Rush Actually Works

A scarce input becomes the moat.

The biggest players lock up capacity first. Interconnection positions. Substation headroom. Transformer slots. Water access. Permits.

People in infrastructure have a name for this pattern: constraint capture (a ‘cornered resource’ play in strategy language).

Once it starts, the downside shows up somewhere else.

Higher bills are the obvious one. But the quieter ones hit first. Interconnection queues get longer, so everyone else waits too: solar and storage projects, factory expansions, grid reliability upgrades, and so on.

Reviews get tougher because regulators and grid operators start asking hard questions about who should pay for upgrades and who gets shut off first at peak load. That turns into more studies, more collateral, and more curtailment language in contracts.

Once people make the mental connection between data centers and higher bills, water stress, and diesel backup noise, local opposition gets louder. Permits slow down or die.

This pattern is being driven by companies you rely on every day:

AWS

Microsoft

Google

Meta

Oracle

And it’s being executed, at ground level, by the data center landlords building the warehouses and negotiating the power:

Digital Realty

QTS

Vantage

CyrusOne

STACK

They can see the same constraints everyone else can see, and they can see the downstream costs too. And still, the pursuit of power keeps accelerating because the incentives are stronger than the downsides…for the corporations.

Call it malicious or call it rational capitalism. Either way, it’s deliberate in practice because Jeff, Satya, Sundar, Mark, and Larry choose not to stop.

The Land Rush Is Real

The International Energy Agency projects global electricity use from data centers will more than double to around 945 TWh by 2030 in its Base Case, with AI as the biggest driver of the growth.

In the US, power comes with a waitlist.

Berkeley Lab tracks the interconnection queue, which is the line of proposed power plants and large batteries waiting for permission to connect.

Before anything can turn on, grid operators have to study whether local wires and equipment can handle it and then build whatever upgrades are required, often bigger lines, expanded substations, and new transformers.

As of the end of 2024, proposed power plants and batteries totaling nearly 2,300 GW were in line to connect.

That number doesn't mean it all gets built. But it does demonstrate how clogged the system is, and why everyone’s fighting for position.

How Innovation Starts Doing Harm

Innovation does harm when the winners get access to power while we all foot the bill.

A hyperscaler shows up and asks for the kind of electricity a small city uses. The grid can’t just add more. It has to build upgrades: bigger transmission lines, expanded substations, new transformers, and new protection equipment to keep the system stable.

Those upgrades take time and money. The time shows up as waitlists and delays for everyone else who wants to connect anything new, from solar farms to batteries to factories. The money shows up in the utility’s public rate-setting process, where regulators decide whether costs land on the project or get spread across consumers.

Power shows up, upgrades follow, and the invoice has to land somewhere. Now watch what happens when “we’re behind” hits a grid that can’t move faster.

“Behind” Turns Into “Build It Now”

Hyperscalers, and the data center landlords feeding them, are acting like timelines are negotiable. They aren’t. The grid moves at the speed of studies, steel, and permits.

When load shows up faster than the grid can respond, utilities and grid operators end up in a corner: slow down connections, or build upgrades fast.

Both are painful.

One is painful for the data center.

The other is painful for everyone.

Cost Allocation Decides Who Gets Hurt

Every AI megawatt has a bill behind it: substations, feeders, transmission upgrades, switching gear, backup procurement, and capacity obligations.

The question is simple:

Who pays?

If upgrades are recovered through the general rate base, households pay part of the buildout through higher bills. If upgrades are forced back onto the project, those projects slow down, shrink, or move.
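To make that trade-off concrete, here is a rough back-of-the-envelope sketch in Python. Every number in it is hypothetical (the upgrade cost, the recovery period, the customer count), and the function name `monthly_bill_impact` is mine, not anything a utility or regulator uses. It assumes straight-line cost recovery split evenly across households, which ignores financing costs, the utility's allowed return, and real rate design. Treat it as an illustration of the mechanism, not an estimate of anyone's actual bill.

```python
def monthly_bill_impact(upgrade_cost, years, customers, socialized_share):
    """Dollars per household per month when a share of an upgrade is
    recovered through the general rate base (simplified, straight-line)."""
    socialized_total = upgrade_cost * socialized_share
    return socialized_total / (customers * years * 12)


# Hypothetical inputs, chosen only to keep the arithmetic easy to follow.
UPGRADE_COST = 600_000_000   # dollars of grid upgrades tied to one large load
YEARS = 20                   # assumed recovery period
CUSTOMERS = 1_000_000        # households in the utility's territory

for share in (0.0, 0.5, 1.0):
    per_month = monthly_bill_impact(UPGRADE_COST, YEARS, CUSTOMERS, share)
    project_pays = UPGRADE_COST * (1 - share)
    print(f"socialized {share:.0%}: households +${per_month:.2f}/month, "
          f"project pays ${project_pays:,.0f}")
```

The point of the sketch is the lever, not the dollar figures: the same upgrade either lands on one project's balance sheet or gets divided into small monthly amounts across every household, and the allocation decision is what moves it from one column to the other.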

This is also where the hyperscalers and the landlords negotiate the fine print that matters for the future. Upgrade responsibility. Upfront collateral. Curtailment terms. Who eats overruns. Who gets priority.

Wired reports residential electricity rates have risen more than 30% on average since 2020, but the biggest drivers have been the usual suspects: fuel costs and grid spending.

AI isn’t the whole story yet. But in the places where data centers concentrate, it starts to show up faster, and it turns into a political fight.

Backlash Becomes a Constraint Too

Once households feel the cost, permitting gets harder. Regulators tighten rules. Grid operators start enforcing curtailment terms, contractual obligations that allow power providers to limit supply in times of overwhelming need.

In January 2026, Reuters reported on the “connect and manage” direction from PJM, the grid operator for much of the eastern seaboard, under which new large power users may need to bring their own power or accept earlier curtailment.

PJM’s own board summary uses the same language.

So the loop looks like this:

Big load arrives.

Upgrades get socialized or volatility spreads.

Bills rise and reliability fears spike.

Backlash hits.

Permitting and connection terms tighten.

Everyone slows down.

If you're a two-person AI startup, none of this touches you directly. But you, like the rest of us, are beholden to the likes of AWS, Microsoft, Google, Meta, and Oracle, plus data center landlords like Equinix, Digital Realty, QTS, Vantage, CyrusOne, and STACK, who are building warehouse-sized data centers that consume power like small metropolises. The rarefied few have the power to demand entitlement. The rest of us just foot the bill.

The Argument Everyone Is Missing

The loudest AI conversation is still a nerd fight.

Which model is best.

Which benchmark matters.

Who has the smartest chatbot this week.

Meanwhile, the real bottleneck is showing up in places normal people actually feel. Not in tokens. In megawatts.

While the internet debates frontier models, a rarefied few are locking up small-city power for warehouse-sized data centers. Utilities are rebuilding parts of the grid to keep up. Regulators are deciding how the costs get allocated.

If those costs get spread broadly, you don’t get a better model.

You get a higher bill.

Share This